Overengineering a Blog

This post describes the infrastructure of this blog before a more recent rewrite, where I removed all the cool features described herein. The blog is now made with eleventy and doesn’t have interactive code blocks. Ultimately, it wasn’t worth the upkeep.

I talked a little already about why I wanted to build a blog with interactive code, but I have to be honest: part of why I wanted to do this, and particularly why I chose not to use a prepackaged solution like Monaco Editor, was to see if I could do it. Compilers and text editors are two computing topics I find oddly fascinating. The chance to combine them in a practical and narrowly scoped project was alluring.

My goal was to build something that would:

- be lighter and feel more native than Monaco Editor (no custom scrollbar),

- have perfect syntax highlighting (no regex approximations),

- expose compiler diagnostics and type information of identifiers,

- be linkable to other code blocks so one can reference symbols defined in another,

- be able to reference outside libraries and other arbitrary unseen code.

I expressly did not intend to build:

- a good code editor,

- something so complex that I never finish and publish this blog.

I knew I wasn’t setting out to build an editor. I was building a learning tool, 90% of whose value is not in editing—the type info and diagnostics tooltips were far more important. So I think choosing not to use Monaco was a reasonable decision after all. Having language support and great syntax highlighting, which Monaco could have provided better than I could do myself, was crucially important, but pushing an editor fully featured enough to power VS Code onto someone who just came to read an article seemed like an irresponsible use of your bandwidth.

In other words, I had a moral imperative to half-ass an editor to lower your data usage.

The Editor Itself

The editing experience was the least important aspect of the code blocks, but I also knew from a previous (unfinished) side project that the UI piece should be pretty easy with the help of Slate. Slate is capable of handling some complex editing scenarios; it takes care of the nitty-gritty of working with contenteditable for you, and I assumed building components to render colors and tooltips would be simple. That’s mostly how it worked out. I give the Slate Editor a model that annotates spans of text with metadata (these annotated spans are called Decorations in Slate), a function that renders a React component for a given span of text, and a simple value and onChange pair. In a greatly simplified version, the gist is:

function InteractiveCodeBlock(props: InteractiveCodeBlockProps) {

const [value, setValue] = useState(props.initialValue);

return (

<Editor

value={value}

onChange={({ value }) => setValue(value)}

decorateNode={props.tokenize}

renderMark={props.renderToken}

/>

);

}

where

- tokenize takes the full text and returns a list of Decorations: tokens that describe how to syntax highlight each word, where to make compiler quick info available, and what to underline in red; and,

- renderToken gets passed one of those words with its descriptive tokens and renders that word with the correct syntax highlighting, and perhaps with tooltips attached to show information from the compiler.

My real code is much more complex, but mostly due to performance hacks. This is a good representation of the actual architecture.

The biggest factor that makes this example an oversimplification is this: because in TypeScript, changing something on line 1 can change the state of something on line 10, I need to analyze the full text, every line, on every change. But if I use the new program state to build a new list of Decorations for the whole code sample, then every node (basically a React component for every word and punctuation mark) would re-render on every change. My solution involves a lot of caching, hashing, and memoization. I analyze the whole document on every change (well, that’s an oversimplification too; I’ll get there), then break the result down into tokens by line, and cache that. If I can tell that a whole line of new tokens is identical to a whole line of existing tokens by comparing line hashes, I can just return the existing Decoration objects. Slate will use this strict equality to bail out of re-rendering those lines, which removes a noticeable amount of latency on each keystroke.
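The identity-preserving part of that idea can be sketched roughly as follows. This is a minimal illustration, not the blog’s actual code: the Token shape, lineKey, and LineTokenCache are hypothetical names, and a serialized string stands in for the line hash so that collisions are not a concern.

```typescript
// Hypothetical token shape; real Decorations carry more metadata.
interface Token {
  start: number; // offset relative to the start of the line
  end: number;
  type: string;
}

// Serializes a line's tokens into a comparable key (a stand-in for a hash).
function lineKey(tokens: readonly Token[]): string {
  return JSON.stringify(tokens.map((t) => [t.start, t.end, t.type]));
}

interface CachedLine {
  key: string;
  tokens: readonly Token[];
}

class LineTokenCache {
  private previous: CachedLine[] = [];

  // Takes freshly computed tokens for every line and returns, per line,
  // the previously returned array when the content is unchanged, so that
  // a strict equality check (===) downstream can skip re-rendering.
  reconcile(freshLines: readonly (readonly Token[])[]): (readonly Token[])[] {
    const next = freshLines.map((tokens, index): CachedLine => {
      const key = lineKey(tokens);
      const old = this.previous[index];
      return old !== undefined && old.key === key ? old : { key, tokens };
    });
    this.previous = next;
    return next.map((line) => line.tokens);
  }
}
```

Because offsets are stored relative to each line, typing on one line leaves the keys of every other line untouched. Comparing by line index is the simplest possible scheme; inserting or deleting a line shifts everything below it, so a more careful version would match lines by content instead.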

Static Rendering

I’m telling this piece of the story a little out of order, because I think it’s important context for everything that follows. I didn’t initially plan for this, but as I began to realize how much library code would be necessary to achieve editable code blocks with language support (the TypeScript compiler is larger than the rest of my blog’s assets combined), I once again felt a moral imperative to respect your data usage. Generally speaking, people don’t expect to have to download a compiler in order to read a blog post.

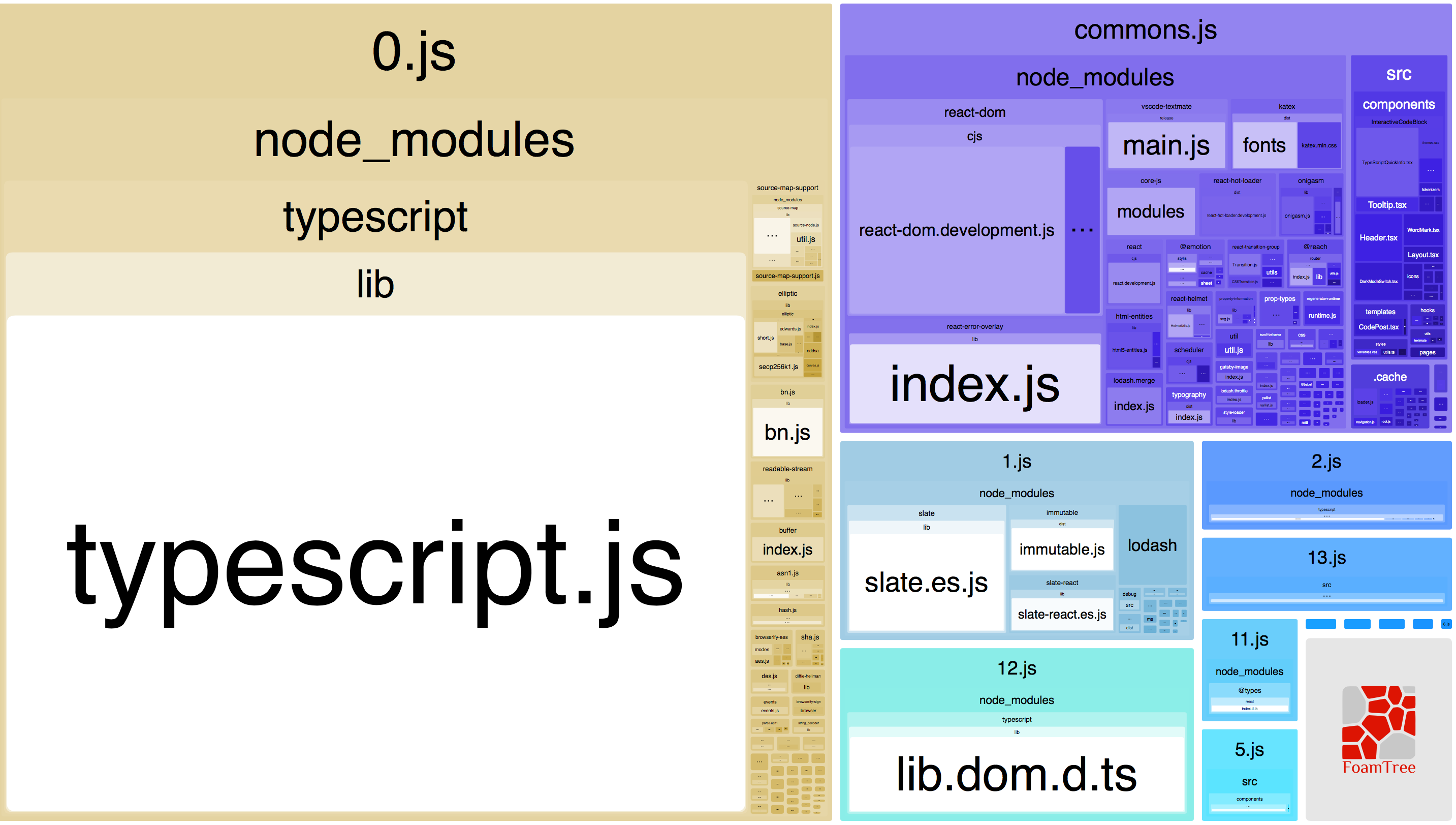

webpack-bundle-analyzer shows TypeScript’s size relative to everything else.

I also realized that none of the really heavy pieces are necessary until the moment a reader starts editing a code sample. I could analyze the initial code at build time, generate syntax highlighting information, type information, and compiler diagnostics as static data, inject it with GraphQL, and reference it during render, all for a fraction of the weight of the compiler and syntax highlighter themselves.

Gatsby made this fairly easy. During a build, I search the Markdown posts for TypeScript code blocks, run the static analysis on their contents, and inject the results into the page context (which becomes available as a prop in the page template component).

Only when a reader clicks the edit button on a code block are the heavier dependencies downloaded, and the static analysis starts running in the browser. The huge majority of the analysis code is shared between the build-time process and the browser runtime process.
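A minimal sketch of that “load on first edit” pattern, under stated assumptions: lazy, Analyzer, loadAnalyzer, and onEditClicked are all hypothetical names, and the loader body fakes what would really be a dynamic import of the compiler and tokenizer bundles.

```typescript
// Wraps an expensive async loader so it runs at most once, and only on demand.
function lazy<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loader();
    }
    return pending;
  };
}

// Hypothetical interface for the heavy analysis code.
interface Analyzer {
  analyze(code: string): string[];
}

// Counts how many times the "download" was started, for illustration only.
let downloads = 0;

const loadAnalyzer = lazy(async (): Promise<Analyzer> => {
  downloads++;
  // In a real bundle this would be a dynamic import of the heavy modules;
  // a trivial whitespace splitter stands in for the analyzer here.
  return { analyze: (code) => code.split(/\s+/).filter(Boolean) };
});

// Hypothetical click handler for a code block's edit button.
async function onEditClicked(code: string): Promise<string[]> {
  const analyzer = await loadAnalyzer();
  return analyzer.analyze(code);
}
```

Every code block on the page can share the same loadAnalyzer, so clicking edit on a second block reuses the promise from the first. One caveat of this simple version is that a failed load stays cached as a rejected promise.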

The Language Service

If you’ve ever used the TypeScript compiler API, one of the first things you learn is that every important piece of the compiler interacts with the outside world via abstractions called hosts. Rather than calling into the file system directly with the fs module, the compiler asks for a ts.CompilerHost object that defines how to readFile, writeFile, and otherwise interact with its environment.

This makes it simple to provide an in-memory file system to the compiler such that it has no trouble running in the browser. “File system” is an overstatement for the code I wrote, as the crucial functions are basically just aliases for Map methods:

function createSystem(initialFiles: Map<string, string>): ts.System {

const files = new Map(initialFiles);

return {

...otherStuffNotImportantToExample,

getCurrentDirectory: () => "/",

readDirectory: () => Array.from(files.keys()),

fileExists: (fileName) => files.has(fileName),

readFile: (fileName) => files.get(fileName),

writeFile: (fileName, contents) => {

files.set(fileName, contents);

},

};

}

Linked Code, Library Code, and Imaginary Code

The “source files” you see in the code blocks get pulled from Markdown source files. There’s a lot of code you don’t see, though, like the default library typings, as well as typings for other libraries I want to reference, like React. In the browser, an async import with Webpack’s raw-loader does the trick, and ensures the lib files don’t get downloaded until they’re needed:

async function getReactTypings(): Promise<string> {

const typings = await import("!raw-loader!@types/react/index.d.ts");

return typings.default;

}

During the static build, it’s just a fs.readFileSync. Plug the result into the virtual file system, and it Just Works™. Cool.

Recall the goal of being able to link code blocks together? Here’s what I mean. Suppose I’m explaining a concept and introduce some silly function:

function someSillyFunction() {

return "It is silly, isn’t it!";

}

I might want to interrupt myself for a moment to set the stage before demonstrating how I might use this function:

// Hover these, and see that they’re real!

imaginaryObject.sendMessage(someSillyFunction());

Two things are in play here. First, someSillyFunction is being referenced from the previous block. In fact, if you edit the former and rename someSillyFunction or change its return type, then return focus to the latter, you’ll see compiler errors appear.

Second, I never defined imaginaryObject in visible code since it’s not important to the concept I’m trying to demonstrate, but I do need it to compile. (It turns out that aggressively checking example code requires you to get creative with how you write examples that are 100% valid, but still simple and to the point.)

These techniques are signaled by HTML comments and YAML frontmatter in the Markdown source:

---

preambles:

  file: silly.ts

  text: "declare var imaginaryObject: { sendMessage: (message: string) => void; }\n"

---

ts function someSillyFunction() { return 'It is silly, isn’t it!'; }

I might want to interrupt myself for a moment to set the stage before demonstrating how I might use this function:

ts // Hover these, and see that they’re real! imaginaryObject.sendMessage(someSillyFunction());

(That got pretty meta, huh?) The matching name field makes the two code blocks get concatenated into a single ts.SourceFile so they’re in the same lexical scope. The preamble field simply adds code to the beginning of the ts.SourceFile that doesn’t get shown in either editor.
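The concatenation step might look something like the sketch below. It is an illustration under assumptions, not the blog’s real build code: CodeBlock, VirtualFile, and buildVirtualFiles are hypothetical names, and the offset bookkeeping is one plausible way to map compiler positions back to the block a reader actually sees.

```typescript
// Hypothetical description of one visible code block in a post.
interface CodeBlock {
  id: string;       // unique per block
  fileName: string; // blocks sharing a name end up in the same source file
  text: string;
}

interface VirtualFile {
  fileName: string;
  text: string; // hidden preamble followed by every block, in document order
  // Block id -> range of that block's text within `text`.
  spans: Map<string, { start: number; end: number }>;
}

function buildVirtualFiles(
  blocks: readonly CodeBlock[],
  preambles: ReadonlyMap<string, string>
): Map<string, VirtualFile> {
  const files = new Map<string, VirtualFile>();
  for (const block of blocks) {
    let file = files.get(block.fileName);
    if (file === undefined) {
      // The preamble goes first and is never assigned a span,
      // so it is compiled but not attributed to any visible block.
      file = {
        fileName: block.fileName,
        text: preambles.get(block.fileName) || "",
        spans: new Map(),
      };
      files.set(block.fileName, file);
    }
    const start = file.text.length;
    const end = start + block.text.length;
    // Keep blocks on separate lines even if one lacks a trailing newline.
    file.text += block.text.endsWith("\n") ? block.text : block.text + "\n";
    file.spans.set(block.id, { start, end });
  }
  return files;
}
```

With the spans recorded, a diagnostic at file offset p inside a block’s range maps to offset p minus that block’s start within its own editor, and anything reported inside the preamble can be filtered out.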

Tokenizing with the Language Service

Time to do something with all this infrastructure. There’s not a ton of documentation out there on using the compiler API, so I spent a while fiddling with things, but in the end it came out pretty simple. Once you have a browser-compatible replacement for ts.System, you’re a tiny step away from having a ts.CompilerHost, and from there, a ts.LanguageService follows in short order. When the editor calls tokenize, asking for the text to be split up into annotated ranges, three language service functions are used:

- getSyntacticClassifications provides a sort of high-level classification of tokens (e.g. className, jsxOpenTagName, identifier), from which I pick the name-y ones to be candidates for “quick info” (the tooltip contents);

- getSyntacticDiagnostics, which tells me the location and nature of a syntactic error, like putting a curly brace where it doesn’t belong; and finally,

- getSemanticDiagnostics, which tells me the location and nature of type errors like Object is possibly undefined or Cannot find name 'Recat'.

That’s about it. getSemanticDiagnostics can be a little slow to run on every keypress, so I wait for a few hundred milliseconds of inactivity before reanalyzing the code.
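Waiting for a pause in typing is a plain debounce. Here is a minimal sketch; debounce, reanalyze, and the 300 ms delay are illustrative choices rather than the blog’s actual values, and the callback body only records what a real implementation would hand to the language service.

```typescript
// Returns a wrapper that delays calling `fn` until `waitMs` milliseconds
// have passed without another call; only the latest arguments are used.
function debounce<Args extends unknown[]>(
  fn: (...args: Args) => void,
  waitMs: number
): (...args: Args) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: Args) => {
    if (timer !== undefined) {
      clearTimeout(timer); // a newer keystroke supersedes the pending run
    }
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, waitMs);
  };
}

// Bookkeeping for illustration only.
let analysisRuns = 0;
let lastAnalyzedText = "";

// The slow semantic pass would run inside this callback.
const reanalyze = debounce((text: string) => {
  analysisRuns++;
  lastAnalyzedText = text;
}, 300);
```

Calling reanalyze on every keystroke means a quick burst of typing triggers a single analysis of the final text, roughly 300 ms after the last key.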

Syntax Highlighting

Here’s the sticky part. The first tokenizer I tried for syntax highlighting was Prism, and it didn’t cut it. Prism may produce perfect results for simple grammars, but complex TypeScript samples just didn’t look right. (Part of this is due to Prism’s inherent limitations; part is due to its rather sparse grammar definition. Other grammars are more complete.) By no means do I intend to disparage anyone else’s work. On the contrary, I knew that Prism doesn’t claim to be perfect, but rather aims to be light, fast, and good enough—I didn’t realize just how much lighter and faster it is for its tradeoffs until seeking a higher fidelity alternative.

Second Attempt: TypeScript

The obvious choice for perfect syntax highlighting, I thought, was the TypeScript compiler itself. I would already have access to it given what I was doing with the language service, and clearly it understands every character of the code in a markedly deeper, more semantic way than any regex engine. Using getSyntacticClassifications again did a little better overall than Prism on its own. Adding extra information from getSemanticClassifications improved things further.

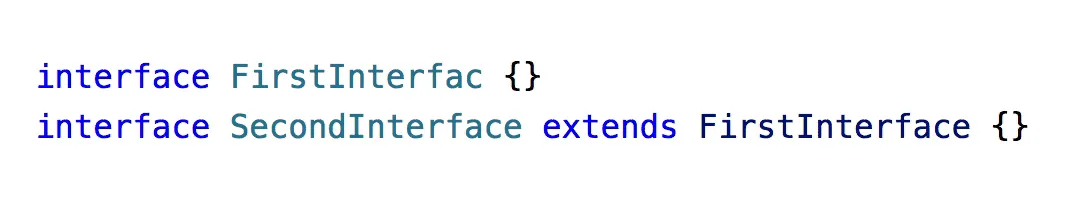

However, one quirk of this approach was that referenced interface names and class names would only be colored as such if they actually existed. For instance:

FirstInterface in the second line becomes variable-colored when the identifier no longer exists. It was kind of interesting, but not necessarily desirable. A little distracting.

Besides, the code still just didn’t look quite right. At this point I started really taking note of how highlighting in real code editors works. Call expressions (e.g., callingAFunction()) are typically highlighted in a different color than other identifiers, and the classification APIs weren’t giving me that information. I tried augmenting their results by walking the AST, but that had a considerable performance impact. I was starting to feel like I could spend countless hours striving for something perfect only to end up with something that not only misses the mark, but is unusably slow too.

Final Attempt: TextMate Grammar

I ultimately decided to try to use the official TypeScript TextMate grammar used by VS Code and Atom. Like the TypeScript lib files, the grammar file contents are retrieved with fs at build time and an async raw-loader import in the browser (only once editing has begun). I found the package VS Code uses to parse the grammar file and tokenize code, and gave that a try.

Now, here’s the most absolutely bananas thing I learned during this whole project: TextMate itself used the regular expression engine Oniguruma (which also happens to be Ruby’s regex engine), so TextMate grammars are written for that engine. It’s a half megabyte binary, and it seems no one has bothered to make a serious attempt at syntax highlighting that works natively in JavaScript. Instead, people have just created Node bindings for Oniguruma, and, fortuitously for my attempt to use this in the browser, ported it to WebAssembly.

I have to pause briefly to express my bewildered exasperation. TextMate was great, but why are we jumping through such hoops to keep doing syntax highlighting exactly the way it was done in 2004? It feels like there’s an opportunity in the open source market to make an uncompromisingly good syntax highlighter with pure JavaScript.

Carrying on. The awkwardly but inevitably named Onigasm allows me to achieve the same quality of syntax highlighting in the browser as TextMate, Sublime Text, Atom, and VS Code achieve on your machine. And again, the WASM module isn’t loaded until you start editing; the initial code tokenization is done at build time. What a time to be writing for the web! It feels not so long ago that border-radius was too new to be relied upon, and now I’m serving WebAssembly.

Download size wasn’t the only price to pay for accuracy, though: tokenizing a whole code sample with vscode-textmate was noticeably slower than doing the same with Prism. Onigasm’s author claims WebAssembly imparts a 2x performance penalty compared to running on V8, but it still makes me impressed with how fast VS Code can update the highlighting as you type. I was able to implement an aggressive per-line cache that keeps the overall typing experience from being impacted too much, but you can still see the delay in formatting each time you insert a new character.
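A per-line cache works for this kind of tokenizer because TextMate-style grammars are applied one line at a time, with a small piece of state carried from each line into the next. The sketch below shows the general shape under heavy assumptions: the toy tokenizeLine only tracks block comments and stands in for a real grammar, and LineTokenizerCache is a hypothetical name, not the blog’s implementation.

```typescript
// State carried from one line to the next (a real grammar carries a rule stack).
type State = "code" | "comment";

interface LineResult {
  tokens: string[];
  endState: State;
}

// Toy line tokenizer: splits a line into code and block-comment ranges.
function tokenizeLine(line: string, startState: State): LineResult {
  const tokens: string[] = [];
  let state = startState;
  let position = 0;
  while (position < line.length) {
    // Look for the delimiter that would end the current state.
    const found = line.indexOf(state === "code" ? "/*" : "*/", position);
    if (state === "code") {
      const end = found === -1 ? line.length : found;
      if (end > position) tokens.push(`code:${position}-${end}`);
      position = end;
      if (found !== -1) state = "comment";
    } else {
      const end = found === -1 ? line.length : found + 2;
      tokens.push(`comment:${position}-${end}`);
      position = end;
      if (found !== -1) state = "code";
    }
  }
  return { tokens, endState: state };
}

class LineTokenizerCache {
  private cache = new Map<string, LineResult>();
  misses = 0; // how many lines actually had to be tokenized

  tokenizeDocument(text: string): LineResult[] {
    let state: State = "code";
    return text.split("\n").map((line) => {
      // A line's result depends only on its text and its starting state.
      const key = `${state}\u0000${line}`;
      let result = this.cache.get(key);
      if (result === undefined) {
        this.misses++;
        result = tokenizeLine(line, state);
        this.cache.set(key, result);
      }
      state = result.endState;
      return result;
    });
  }
}
```

Keying on both the text and the incoming state matters: editing one line usually costs a single miss, but opening or closing a comment changes the starting state of the lines below it, and those are correctly re-tokenized. This version never evicts entries, which a long editing session would eventually need.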

Looking Forward

Honestly, I’m probably not going to put much more time and effort into improving the code blocks. They already stretched the anti-goal of being “so complex that I never finish and publish this blog.”

If I do invest any time, though, the next improvement will be to move the tokenizers to web workers. They already work asynchronously, so theoretically that should be within reach. My suspicion is that it will add a few milliseconds more latency to the tokenizing in exchange for making the typing and rendering itself snappier. Tokenizing is currently debounced and/or called in a requestIdleCallback so as to get out of the way of rendering text changes as quickly as possible, but if you type quickly, priorities can still collide easily with everything sharing a single thread.

But first, more posts. I made a blog and put my name on it. Guess I’m stuck with it now. 😬